Every artist, writer, innovator, and creator knows the feeling. The deafening silence of a blank canvas, the intimidating cursor blinking on an empty page, or the mental wall that appears just when a breakthrough feels close. This is the friction of creation, a universal challenge in the journey toward artistic flow and innovation. But what if the key to unlocking your creative genius wasn't a complex new technique, but a simple, powerful shift in perspective? This is where the wisdom of others can serve as a potent catalyst. This article explores how carefully chosen quotes can act as more than just inspiration; they are functional tools that can dismantle creative blocks, foster resilience, and ignite the very spark of innovation you are searching for.

Overcoming the blank page: Quotes for getting started

The greatest hurdle in any creative endeavor is often the first step. Fear of imperfection, the weight of expectation, and simple procrastination can conspire to keep brilliant ideas locked away. This initial paralysis is where quotes can serve as a vital permission slip to begin, not perfectly, but simply to begin. They reframe the intimidating goal of a finished masterpiece into the manageable task of making the first mark. Consider the wisdom of a master storyteller and a celebrated humorist:

- "The scariest moment is always just before you start." - Stephen King

- "The secret of getting ahead is getting started." - Mark Twain

These phrases don't offer complex strategies; they offer psychological relief. They validate the fear while simultaneously demystifying the solution. The message is clear: the anxiety is normal, and the only antidote is action. By embracing the idea that starting is the victory, you release yourself from the pressure of immediate perfection and open the door to genuine artistic flow.

Pushing through the middle: Quotes for perseverance and resilience

Once you’ve started, the journey enters a new phase: the messy middle. This is where initial enthusiasm can fade, self-doubt creeps in, and the path forward becomes unclear. Maintaining momentum requires a different kind of fuel, one centered on perseverance and creative resilience. Quotes in this stage act as a trusted friend, reminding you of your purpose and the inherent value of the struggle. They help you re-engage with your passion when the work feels more like a chore. The words of a tormented artist and a prolific inventor speak volumes:

"I am seeking, I am striving, I am in it with all my heart." - Vincent van Gogh

This quote is a mantra of commitment, shifting focus from the outcome to the process. It's a reminder to connect with the passion that initiated the project. Similarly, consider the mindset of Thomas Edison, whose work was defined by persistence:

"I have not failed. I've just found 10,000 ways that won't work."

This famous sentiment is crucial for innovation. It transforms perceived failures into essential data points. It builds resilience by teaching that every "wrong" turn is a step toward the right one, keeping you in the game long enough for a breakthrough to occur.

The power of perspective: Quotes that spark innovation

Creativity is not always about pushing harder; sometimes, it's about thinking differently. True innovation rarely comes from following a straight line. It emerges from connecting disparate ideas, challenging assumptions, and looking at a problem from an entirely new vantage point. This is where quotes can act as a cognitive crowbar, prying open fixed mindsets to let new light in. The words of history's greatest thinkers encourage this essential shift.

| Quote | The Innovative Spark |
| --- | --- |
| "We cannot solve our problems with the same thinking we used when we created them." - Albert Einstein | This is a direct command to abandon old patterns and actively seek a new level of understanding. It forces you to question your foundational approach. |
| "Creativity is just connecting things." - Steve Jobs | This quote demystifies innovation. It suggests looking at your existing knowledge and experiences as a palette of dots waiting to be connected in a novel way. |

These perspectives encourage you to step outside your immediate task. They prompt you to read a book from a different field, talk to someone with a completely different background, or simply ask "what if?" This practice of divergent thinking is the fertile ground from which groundbreaking ideas grow.

Curating your own creative mantra: Making quotes work for you

Passively reading inspiring words is pleasant, but actively integrating them into your creative process is transformative. The true power of these quotes is unleashed when they become personal tools. The goal is to move from temporary inspiration to a sustainable creative practice. Building your own curated collection of quotes and embedding them into your routine can provide a consistent source of fuel. Here are a few practical ways to do this:

- Start a creative journal: Dedicate the first page of a notebook to your favorite quotes. Re-read them before you begin a work session to set your intention.

- Use your workspace: Write a single, powerful quote on a sticky note and place it on your monitor, easel, or desk. Choose one that addresses your current creative challenge.

- Use them as prompts: Take a quote and use it as a starting point for a brainstorming session. For example, after reading Steve Jobs' quote, spend 10 minutes trying to connect two completely unrelated ideas.

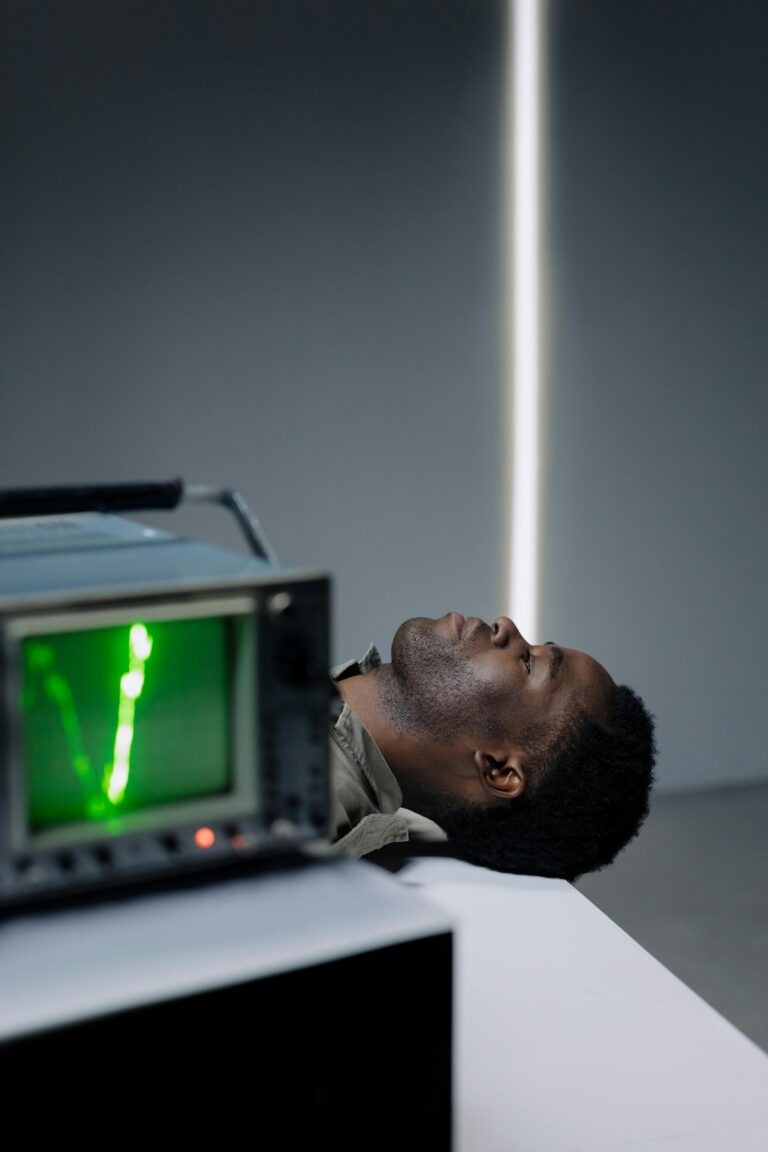

* Create a visual board: Use a platform like Pinterest or a physical corkboard to create an "inspiration board" that mixes visual imagery with potent quotes that resonate with you.

By making these words a tangible part of your environment and process, you create a personalized support system that fuels your genius on demand.

In the end, the journey to unlocking your creative genius is a deeply personal one, filled with unique challenges and triumphs. This exploration has shown that quotes are far more than just pleasantries; they are powerful, functional tools. We’ve seen how they can grant you permission to start, provide the resilience to persevere through the messy middle, and offer the shift in perspective needed for true innovation. By actively curating and integrating these fragments of wisdom into your daily practice, you build a reliable system for igniting your artistic flow. Remember that creativity is not a finite resource but a muscle. These words are the weights, and by consistently engaging with them, you strengthen your ability to create, innovate, and bring your unique vision to life.

Image by: Landiva Weber

https://www.pexels.com/@diva